Automating SEO in a Publishing Business

Published 2024-04-22

Today, we’ve asked Audience Development Strategist, Caitlin Hathaway, to explain the challenges and solutions encountered in a publishing business when automating and improving their SEO initiatives. She'll also be joining our expert panel in our upcoming webinar: From Crawling to Content: SEO for News & Publishing Sites

Not long after I began my role at MVF, it became clear that our SEO team was getting bogged down in manual, repetitive tasks – from monthly content audits to assessing algorithm updates. With limited resources stretched across many websites, prioritisation became increasingly difficult.

We needed to change the way we worked.

This article will give you insight into the SEO challenges we faced as a publishing business and the changes we made in order to work more efficiently, while still delivering quality outcomes. I’ll also share a couple of the key automated solutions we created so that other publishers can achieve similar relief.

Contents:

- Background and SEO team structure

- Challenge 1: Prioritising SEO initiatives within a single SEO team

- Solution: Specialised SEO sub-teams

- Challenge 2: Time spent performing manual content SEO checks

- Solution: Automated content SEO checks

- Challenge 3: Training content teams on new in-house SEO standards

- Solution: Content SEO workshops

- The Results

- Responding to Google Algorithm Updates as a Publishing Business

- Automating Algorithm Tracking with Multiple Brands

- The Results

- Final Thoughts

Background and SEO team structure

I work at MVF, a media and marketing company managing a portfolio of owned brands, including WebsiteBuilderExpert and Tech.co.

SEO sits in the publishing department, situated close to the heart of all our brands’ content teams. We’re all responsible for the performance of our brands and collaborate closely.

Each brand has its own content team consisting of an Editor, content managers, and writers, with freelancer writing support.

We have a dedicated tech team for the publishing department. Their work is focused on organic brands, and we liaise with them on fixing issues, as well as developing new components for our brands that enhance our organic presence.

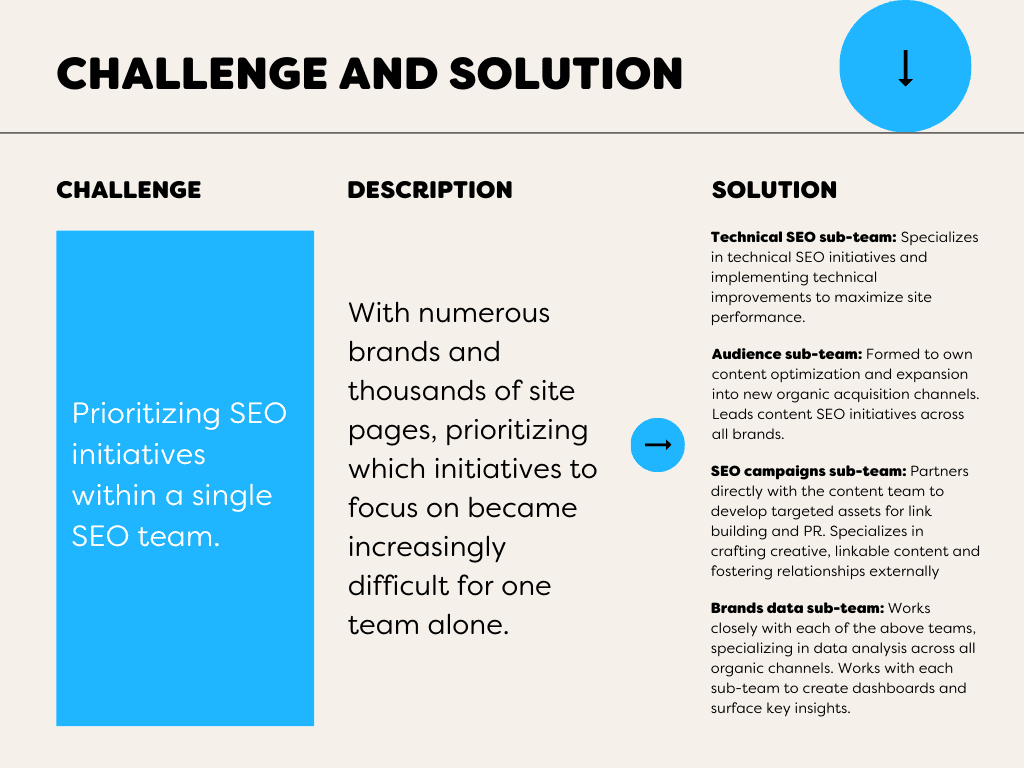

Challenge 1: Prioritising SEO initiatives within a single SEO team

Until a year and a half ago, we were structured as one centralised SEO team responsible for everything related to technical SEO and content SEO.

With numerous brands and thousands of site pages, prioritising which initiatives to focus on became increasingly difficult for one team alone.

For example, technical migrations for Brand A competed for resources with content expansion for Brand B, so wherever we focused our attention there was an opportunity cost elsewhere.

Clearly, this structure wasn’t working.

Solution: Specialised SEO sub-teams

We evolved from having one centralised SEO team to specialised sub-teams dedicated to specific disciplines, such as technical SEO, content SEO, and offsite campaign development.

Our structure is now as follows:

- Technical SEO team: Specialises in technical SEO initiatives and implementing technical improvements to maximise site performance. Works closely with our product team to prioritise and develop new site features that enhance our organic presence.

- Audience team: Formed to own content optimisation and expansion into new organic acquisition channels. This includes leading content SEO initiatives across all brands, as well as identifying and leveraging emerging organic channels to attract new audiences.

- SEO campaigns team: Partners directly with the content team to develop targeted assets for link building and PR. Specialises in crafting creative, linkable content and fostering relationships externally, enabling us to expand our backlink profile and improve the authority of our brands.

- Brands data: Another new sub-team that works closely with each of the above teams, specialising in data analysis across all organic channels. Works with each sub-team to create dashboards and easily surface key data and insights.

This shift to specialised sub-teams has given our SEO function clear ownership of initiatives, fixing the challenge posed by one team juggling everything. Now, each sub-team has its own dedicated specialists, who are empowered to test and iterate within their channels to accelerate growth across all areas of organic acquisition.

Although each sub-team has a distinct focus, we have a collaborative culture where we regularly provide feedback and work together to amplify initiatives that benefit all four channels.

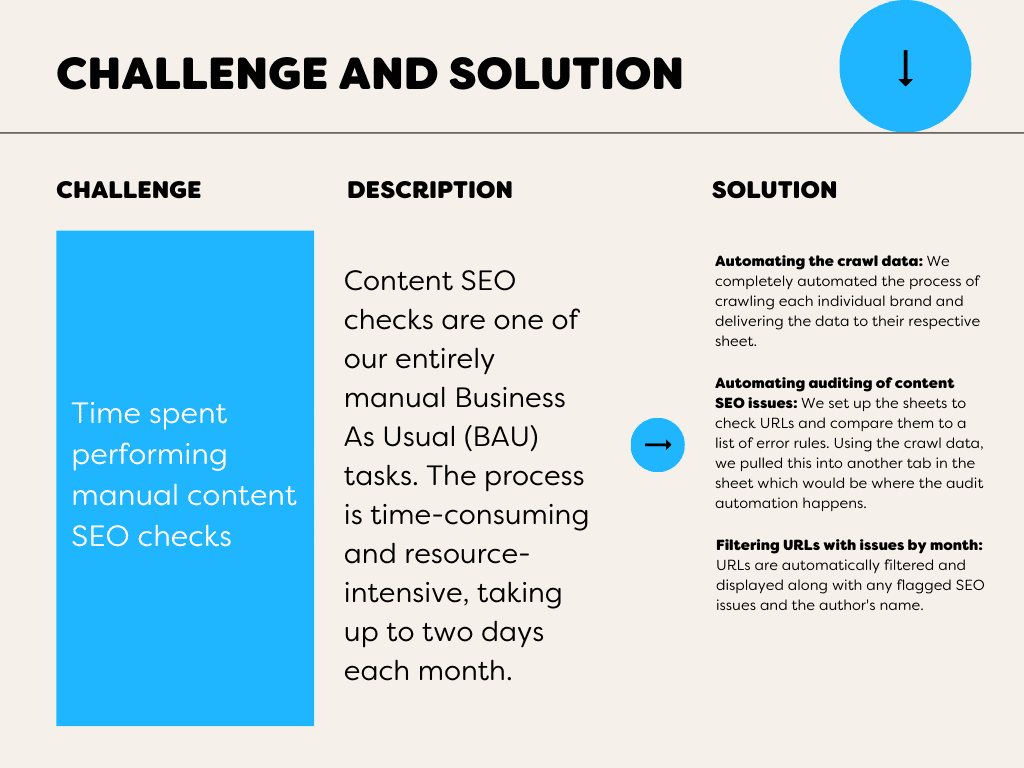

Challenge 2: Time spent performing manual content SEO checks

Content SEO checks were one of our entirely manual Business As Usual (BAU) tasks. The process was time-consuming and resource-intensive, taking up to two days each month and involving multiple team members. This process took time away from higher-priority tasks that brought value.

Our content SEO checks are essentially audits of all newly published pages. We would analyse the on-page SEO of URLs for issues, which we would then fix. Ultimately, our goal is to optimise articles as much as possible before publishing, which makes content SEO checks a vital part of our BAU processes.

These were the on-page areas we would check for issues per brand:

- Title tags

- Meta descriptions

- H1s

- URLs

- Internal inlinks

- Internal outlinks

Before automation, every single step of the process was completed manually by the SEO team.

The steps included:

- Manually crawling each brand website with our dedicated crawling tools

  - At the beginning of every month, we crawled each brand individually.

- Exporting large datasets to sheets

  - Exporting crawl data to sheets could be challenging. Some datasets were so extensive that they could overwhelm and crash the sheets doc.

- Sorting through the data to detect issues

  - After extraction, our next task was sorting the data. This involved:

    - Arranging entries by date.

    - Creating a dedicated sheet for internal inlinks and outlinks. This helped us streamline the process and accurately assign the number of in-content links for each brand.

- Formatting the issues into a report to send to each brand stakeholder

  - The report was then sent to each brand content team, and the fixes were shared between the SEO team and the content managers. This lengthy process often took two full days per month.

- Performing fixes on certain areas ourselves

  - We would fix certain areas ourselves per brand.

Solution: Automated content SEO checks

Our system now helps our teams monitor published content and identify trends in issues. We can also dive further to see if it’s an anomaly or a trend based on our past data.


This is how we went about automating the on-page SEO checks process.

How we automated our monthly content SEO checks

We made separate spreadsheets for each brand so they could see their own data without distractions.

We built a tab where their website crawl data is entered through scheduled crawls. We also made a master tab that audits all the URLs. Additionally, we added a ‘latest month’ tab that filters content by date and sorts it by author.

Automating the crawl data

We first needed to completely automate the process of crawling each individual brand and delivering the data to their respective sheet.


We first collaborated with our SEO team to migrate an instance of our crawling tool to the cloud. This enabled us to schedule crawls each month for every brand and automatically export their data to their dedicated sheets, including the custom fields we needed.

The sheet would then only extract the indexable, working URLs we needed so only the relevant content was audited.

QUERY formulae filter the crawl data on parameters such as indexability, so each sheet shows the newest data after every crawl.
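For readers who prefer code to spreadsheet formulas, the same filtering idea can be sketched in Python. This is a minimal illustration rather than the setup described here: the field names `indexable` and `status_code`, and the rule that "working" means an HTTP 200 response, are assumptions about what a crawl export might contain.

```python
def filter_auditable(rows):
    """Keep only indexable pages that returned HTTP 200.

    `rows` is a list of dicts, one per crawled URL. The keys used here
    ("indexable", "status_code") are hypothetical column names.
    """
    return [r for r in rows if r["indexable"] and r["status_code"] == 200]


# Dummy crawl rows for illustration only.
crawl = [
    {"url": "https://example.com/guide", "indexable": True, "status_code": 200},
    {"url": "https://example.com/old", "indexable": True, "status_code": 404},
    {"url": "https://example.com/tag/x", "indexable": False, "status_code": 200},
]

auditable = filter_auditable(crawl)
print([r["url"] for r in auditable])
```

Only the first URL survives the filter, because the second is broken and the third is not indexable.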

Our SEO team created automatic solutions for tallying internal links. These solutions are run automatically on different sheets that are updated with new crawls. The figures are then added to each URL in the sheet automatically.
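As an illustration of what tallying internal links involves, here is a small Python sketch. It assumes the crawl export can be reduced to (source URL, target URL) pairs for internal links; that input shape and the function name are hypothetical, not a description of the sheets themselves.

```python
from collections import Counter


def tally_internal_links(links):
    """Count internal inlinks and outlinks per URL.

    `links` is an iterable of (source_url, target_url) pairs, one per
    internal link found in the crawl (an assumed export shape).
    """
    links = list(links)
    outlinks = Counter(source for source, _ in links)
    inlinks = Counter(target for _, target in links)
    return inlinks, outlinks


# Dummy link pairs for illustration only.
pairs = [
    ("/a", "/b"),
    ("/a", "/c"),
    ("/b", "/c"),
]
inlinks, outlinks = tally_internal_links(pairs)
print(inlinks["/c"], outlinks["/a"])  # 2 2
```

A `Counter` returns 0 for URLs it has never seen, which makes pages with no inlinks easy to spot.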

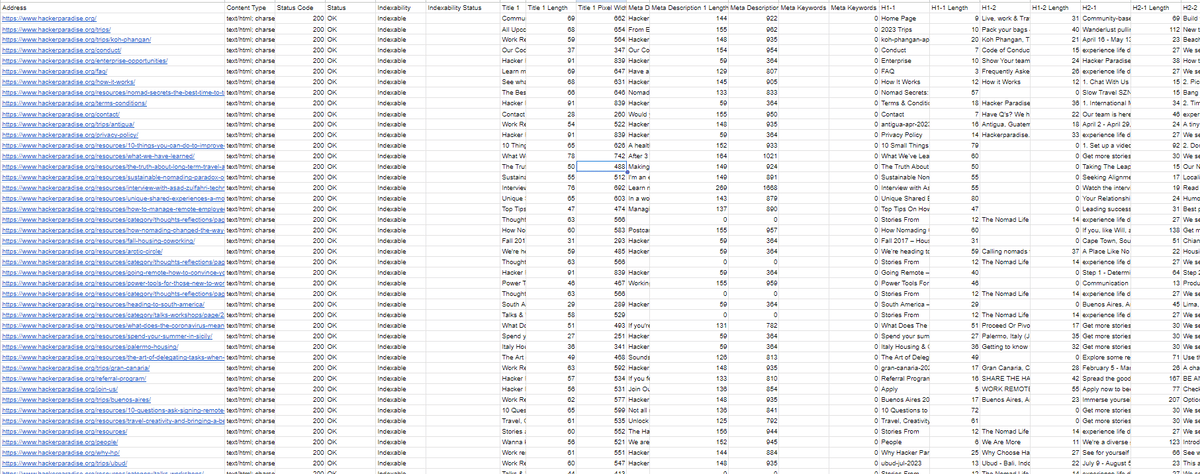

This helped us have on-demand access to clean, filtered crawl data – which you can see below. Beautiful, and completely automated!

The SEO team found it helpful, but the content team struggled to understand the issues in this format. Which is what I’ll get to now!

Automating auditing of content SEO issues

After creating the sheet and automating the crawl data, we needed to automate finding on-page SEO errors on the crawl URLs.

We set up the sheets to check URLs and compare them to a list of error rules. We pulled the crawl data into another tab in the sheet, which is where the audit automation happens. This is called the ‘Master tab’.

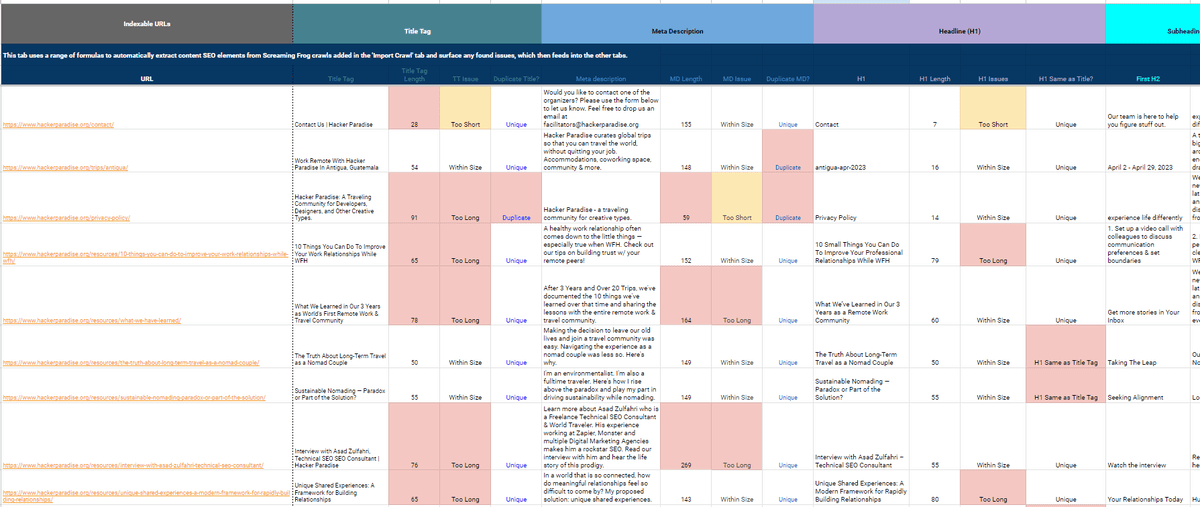

Here is the ‘Master tab’ screenshot using dummy data:

The tab pulls in all of the URLs into Column A using a QUERY formula. We created columns for their corresponding on-page elements like title tags and meta descriptions, which automatically populate alongside in their own columns using more specific QUERY formulas to match the URL in its row.

Next to each on-page element, we would have formula rules programmed to define ‘errors’ on each. These would be how we audit for issues.

We built conditional formatting rules that highlight errors in red if they are significant or in yellow if they are minor.

For example, we would take a simple check, like measuring the length of elements such as title tag and meta description. Based on the outcome, we employed an IF formula to label the title accordingly, i.e. "too short," "within size," "too long," or "missing." This mechanism was similarly applied to meta descriptions and H1s.

=IF(A5="","", IF(C5=0, "MISSING", IF(C5<30, "Too Short", IF(C5>60, "Too Long", "Within Size"))))
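The same length check can be expressed in Python, which may be easier to read than nested IFs. This is a sketch under assumptions: it takes the element text directly and measures its length itself, and it reuses the 30 and 60 character thresholds from the formula as defaults. The missing case is tested first so that an empty element is never reported as merely too short.

```python
def classify_length(text, min_len=30, max_len=60):
    """Label an on-page element (e.g. a title tag) by its character length."""
    length = len(text.strip()) if text else 0
    if length == 0:
        return "MISSING"
    if length < min_len:
        return "Too Short"
    if length > max_len:
        return "Too Long"
    return "Within Size"


print(classify_length(""))               # MISSING
print(classify_length("Short title"))    # Too Short
print(classify_length("a" * 45))         # Within Size
print(classify_length("a" * 61))         # Too Long
```

Different thresholds for meta descriptions or H1s can be passed through `min_len` and `max_len`.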

The sheet also checks all title tags in the column for 'Duplicate title tags' and outputs based on this formula:

=IF(A5="", "", IF(COUNTIF(B:B, B5)>1, "Duplicate", "Unique"))
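For comparison, a duplicate check along the same lines might look like this in Python. It is an illustrative sketch, not the sheet's logic: it keys on the title itself rather than the URL column, and it ignores case and surrounding whitespace when comparing, which is an assumption about what should count as a duplicate.

```python
from collections import Counter


def label_duplicates(titles):
    """Return "Duplicate", "Unique", or "" (for blank titles) per title."""
    def normalise(title):
        return title.strip().lower()

    counts = Counter(normalise(t) for t in titles if t and t.strip())
    labels = []
    for t in titles:
        if not t or not t.strip():
            labels.append("")
        elif counts[normalise(t)] > 1:
            labels.append("Duplicate")
        else:
            labels.append("Unique")
    return labels


# Dummy titles for illustration only.
titles = ["Best Website Builders", "best website builders ", "VoIP Guide", ""]
print(label_duplicates(titles))
```

Blank titles are left unlabelled here so that they are reported once, by the length check, rather than also showing up as duplicates of each other.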

We can count the issues on the content and create graphs that flag the site's content SEO issues.

We could now see ‘failing’ URLs site-wide, and how many issues were present across all of our content. With every crawl, these views would update to reflect the fixes and the presence of any new issues.
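To make the counting step concrete, here is a hedged Python sketch of how failing URLs and issue totals could be derived from per-URL audit labels. The input shape (a dict of check names to labels per URL) and the set of passing labels are assumptions chosen to match the labels mentioned above.

```python
from collections import Counter

# Labels treated as a pass; anything else counts as an issue (assumption).
PASSING = {"Within Size", "Unique"}


def summarise_issues(audit):
    """Return (failing_urls, issue_counts) for an audit result.

    `audit` maps each URL to a dict of {check_name: label}.
    """
    failing_urls = []
    issue_counts = Counter()
    for url, checks in audit.items():
        failed = [name for name, label in checks.items() if label not in PASSING]
        if failed:
            failing_urls.append(url)
        issue_counts.update(failed)
    return failing_urls, issue_counts


# Dummy audit data for illustration only.
audit = {
    "/a": {"title_length": "Within Size", "title_duplicate": "Unique"},
    "/b": {"title_length": "Too Long", "title_duplicate": "Duplicate"},
    "/c": {"title_length": "MISSING", "title_duplicate": "Unique"},
}
failing, counts = summarise_issues(audit)
print(failing, dict(counts))
```

Re-running this after each crawl gives the per-check totals that a chart of site-wide issues would be built from.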

While the master tab provided an overview of site-wide issues, this wasn’t helpful when sharing the sheet with content teams.

Our goal was to decrease the issues in newly published content each month, so looking at the whole site wasn't useful. To address this, we created ‘month’ tabs filtering by publish date and author.

Filtering URLs with issues by month

URLs are automatically filtered and displayed along with any flagged SEO issues and the author's name.

This organised list is then shared with the brand’s content team. Now the content team can view reports contextualised to only their latest monthly content, which helps them to quickly resolve the issues.
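The month filter can be sketched in a few lines of Python. This is an illustration only: the `published`, `author`, and `issues` keys are hypothetical field names, and the sheet itself does this with formulas rather than code.

```python
from datetime import date


def month_view(rows, year, month):
    """Return the rows published in the given month, sorted by author."""
    selected = [
        r for r in rows
        if r["published"].year == year and r["published"].month == month
    ]
    return sorted(selected, key=lambda r: (r["author"], r["published"]))


# Dummy rows for illustration only.
rows = [
    {"url": "/x", "author": "Sam", "published": date(2023, 10, 12), "issues": ["Title too long"]},
    {"url": "/y", "author": "Alex", "published": date(2023, 10, 3), "issues": []},
    {"url": "/z", "author": "Alex", "published": date(2023, 9, 28), "issues": ["Missing H1"]},
]

october = month_view(rows, 2023, 10)
print([(r["author"], r["url"], r["issues"]) for r in october])
```

Sorting by author groups each writer's pages together, so they can see their own flagged issues at a glance.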

The next priority we faced was to train content teams on understanding the sheet, as well as being responsible for the content SEO areas within their existing workflows.

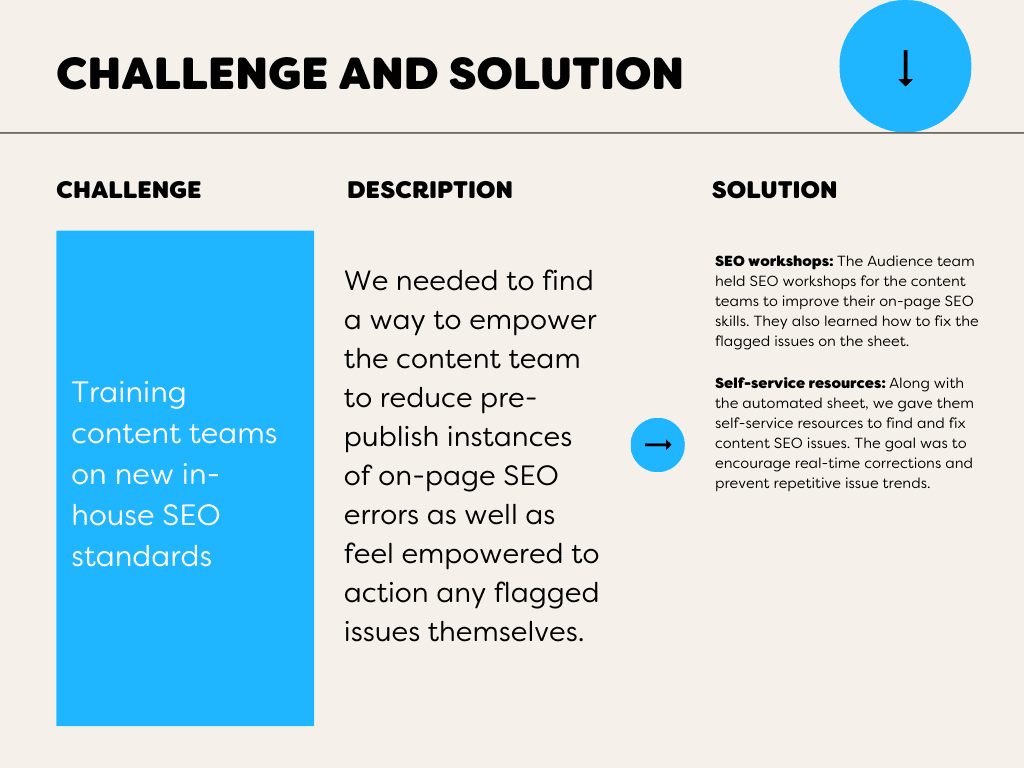

Challenge 3: Training content teams on new in-house SEO standards

We could now monitor and track our SEO on-page issues much more efficiently than before with the new automated sheet.

However, the root issue remained: we were still finding trends of repeat on-page issues when completing checks across all of our sites. We weren’t able to resource all the fixes ourselves due to the volume of pages across every single brand.

We needed to find a way to help the content team reduce these instances before publishing, and to feel empowered to action any flagged issues themselves.

Solution: Content SEO workshops

The Audience team held SEO workshops for the content teams to improve their on-page SEO skills. They also learned how to fix the flagged issues on the sheet. This training is now included as part of the onboarding process for new department members.

Rather than relying on the SEO team for content fixes, we empowered our writers to resolve these issues themselves, as part of creating and publishing content.

Along with the automated sheet, we gave them self-service resources to find and fix content SEO issues. The goal was to encourage real-time corrections and prevent repetitive issue trends.

The Results

Investing time in automating our content SEO process has really helped us to streamline manual, repetitive tasks. Additionally, it has allowed us to:

- Reduce content SEO issues by 63% month-over-month for one brand since deployment

- Save two working days each month on site crawling and report creation/delivery

- Equip our writers to independently resolve content SEO checks

- Curtail longstanding error trends since initiating automation

- Free up our teams to prioritise more strategic, high-impact initiatives

Here is a template for you to use!

Adjusting to the new processes took time, with early months showing high initial issues as brands acclimated. But regular automation created additional visibility, enabling more proactive efforts to reduce errors.

The automated system began in March 2023. The graph shows how one brand's issues changed over a period of 8 months. It uses a scoring system to measure success, with 100% meaning all SEO checks passed.

*Data from November 2023

As you can see, automation brought increased buy-in over time. The department gradually gained confidence in its ability to continually self-diagnose and improve its on-page SEO.

By October, we celebrated our first month with zero issues, for not one, but two of our brands, which was a significant achievement considering where we started!

What began as a band-aid solution transformed through standardisation and visibility – not just resolving errors reactively but proactively preventing the recurrence of issues.

Key learnings

The content teams wanted more feedback, so we increased how often the crawls ran. First, we moved from monthly to biweekly, and now we are moving to weekly.

For larger publishing businesses, a daily automation cadence is likely to be more suitable and allows for a better real-time sense check.

(Editor’s Note: Large-scale publishers can automate their on-page SEO audits using Sitebulb Cloud, a revolutionary cloud crawler with no project limits!)

Ultimately, we created a system where writers take charge of their content's SEO quality. This promoted a culture of ongoing improvement across our brands and meant our SEO team could redirect efforts to more impactful, valuable initiatives.

Responding to Google Algorithm Updates as a Publishing Business

While Google implements hundreds of updates to its search engine algorithm each year, the "core algorithm updates" are less frequent. These updates can significantly shift how Google ranks results. This makes algorithm monitoring essential for publishing businesses.

Algorithm monitoring involves tracking Google’s publicly announced changes to understand impacts to a site's visibility and performance.

Publishing sites are more prone to search ranking fluctuations from Google updates due to expansive content across verticals, and news sites have to contend with Google’s view of news content.

Each brand reacts differently to algorithmic assessments based on its verticals and editorial approaches. However, with heavy reliance on organic traffic through Google Search, Google News and Google Discover, algorithm changes can significantly affect revenue.

To spot impact early on, publishers need to have clear visibility of their rankings and organic traffic. This helps to quickly identify and communicate any impact after algorithm changes.

At MVF, our post-update methodology mirrors industry best practices - assessing brand impact scopes, analysing findings, and communicating analysis and follow up actions internally.

Our previous approach to algorithm tracking was a manual process that took significant time. It involved a mixture of using different platforms such as Google Analytics, Google Search Console, Sistrix, and our rank tracking tools to extract and cross-stitch all of the data to understand the impact on brands.

This made it slow to understand exactly how we had been affected, and then to develop an action plan.

Creating another automated system for algorithm monitoring has saved us a lot of time carrying out all of the above, and more.

Automating Algorithm Tracking with Multiple Brands

With our automated algorithm tracking dashboard, we've made algorithm monitoring more efficient and effective.

Since doing so, we have achieved:

- Streamlined and centralised data collection

- Easier and less time-consuming setup

- Improved stakeholder communication

- A holistic view of organic performance across all brands and competitors, which is understood by the wider business

Automating the Process

These are simplified steps for how we created a basic automated algorithm-tracking dashboard:

- We installed the Google Analytics add-on within Google Sheets to directly access Google Analytics data. For high-traffic sites, we use the GA 360 API to ensure complete and accurate session data that avoids sampling limitations.

- We applied intuitive date ranges to give insights into our performance within our regular reporting cadence:

  - Day against same day last week for a view of immediate changes (e.g. Monday vs last Monday)

  - Month-long range to monitor the algorithm rollout period. This may need to be extended for longer rollouts (the March 2024 core update, for example, would likely need a two-month period)

  - The previous month for comparison

  - A year-ago version of the data to compare then with now

- Schedule hourly refreshes of your reports to stay on top of near real-time changes.

You can then import this data into Looker dashboards.

Having multiple data references to go by helped us illustrate performance and a true vision of how well our sites are doing.
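If you are building the comparison date ranges in code rather than in a reporting add-on, they can be computed with Python's standard library. This is a minimal sketch: the function name, the returned keys, and the 30-day default for the rollout window are assumptions made for illustration.

```python
from datetime import date, timedelta


def comparison_dates(day, rollout_days=30):
    """Return the reference dates and ranges for comparing performance."""
    # Same weekday one week earlier (e.g. Monday vs last Monday).
    same_day_last_week = day - timedelta(days=7)

    # Rolling window covering the rollout period, ending on `day`.
    rollout_window = (day - timedelta(days=rollout_days), day)

    # Previous calendar month as an inclusive (start, end) pair.
    prev_month_end = day.replace(day=1) - timedelta(days=1)
    prev_month_start = prev_month_end.replace(day=1)

    # Same date one year earlier; 29 February falls back to 28 February.
    try:
        year_ago = day.replace(year=day.year - 1)
    except ValueError:
        year_ago = day.replace(year=day.year - 1, day=28)

    return {
        "same_day_last_week": same_day_last_week,
        "rollout_window": rollout_window,
        "previous_month": (prev_month_start, prev_month_end),
        "year_ago": year_ago,
    }


ranges = comparison_dates(date(2024, 4, 22))
print(ranges["same_day_last_week"])  # 2024-04-15
print(ranges["previous_month"][0])   # 2024-03-01
```

For a longer rollout, pass a larger `rollout_days` value, for example 60 for a two-month window.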

- To level up visibility, we used APIs from our rank-tracking and visibility tools, so we could automatically see shifts in the performance of keywords or specific types of keywords.

- Extending this, we used the Sistrix API to monitor the visibility of our key competitors to see how they’re performing and benchmark our performance against theirs.
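Once visibility figures for your own brand and competitors have been fetched, benchmarking comes down to comparing percentage changes across an update. The sketch below assumes the figures are already in hand as (before, after) pairs; it does not call any tool's API, and the domains and values are dummy data.

```python
def pct_change(before, after):
    """Percentage change from `before` to `after`, or None if undefined."""
    if before == 0:
        return None
    return (after - before) / before * 100


def benchmark(visibility):
    """Map each domain to its percentage change in visibility.

    `visibility` maps a domain to a (before, after) pair of index values.
    """
    return {
        domain: pct_change(before, after)
        for domain, (before, after) in visibility.items()
    }


# Dummy visibility values for illustration only.
changes = benchmark({
    "our-brand.example": (2.0, 1.5),
    "competitor.example": (4.0, 5.0),
})
print(changes)
```

Seeing your own change next to a competitor's helps separate a market-wide shift from something specific to your site.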

The Results

We’ve saved significant setup time by copying and adjusting automated dashboard templates instead of creating new ones from scratch. Setup now takes minutes, not hours. We can start tracking and analysing new updates right away.

In addition to streamlining our own workflow, automation has had another surprising but very valuable benefit. It has improved communication about algorithm updates to our department and our leadership, getting more of the wider business interested and caring about SEO performance.

Key Learnings

Our dashboards were complicated and confusing at first. They didn't show all the data, making it hard to see everything clearly. With updates and feedback from stakeholders, we reworked the dashboards to present the metrics people care about simply.

Now, stakeholders can self-serve the latest data whenever they want to see it and can understand performance. This has helped business and leadership better understand SEO and access insights easily.

Final Thoughts

This year has been a busy one. With things changing all the time, we have needed to be more reactive than ever, while still knowing where to direct our attention.

We have achieved high ROI by creating several automated processes and freed up a lot of the team’s time to work on what’s important for the business.


Caitlin is an Audience Development Specialist at MVF, where she focuses on connecting brands with organic audiences and uncovering new channels for content exposure. She is passionate about finding novel and efficient ways to approach SEO workflows, and has made it her mission to help SEOs realize the power of automation and large language models.