From Proof of Concept, to Budget Release and Champers

Getting budget released for development work can be quite hard for an SEO Consultant (like myself) or Agency. Most of the time, a client has their budgets set well in advance for things like technical SEO, and so QA (Quality Assurance) changes aren't considered, or they are, but only as an afterthought. That leaves you with little to work with, or having to 'take' budget from other teams. This can be incredibly hard with eCommerce brands, who tend to plan their development sprints around seasonal releases or product launches.

Disclaimer: I'm very much of the opinion that "Technical SEO" is a QA function and shouldn't sit in the Marketing Department. I think that's why it's so hard to get changes approved at times and why there usually seems to be friction between SEO and Dev. It can seem like two opposing teams when the two should work well together.

So how do you plan your approach? How do you pillage other departments’ development budget and keep people smiling? For me, I've seen the most success when I follow a strategic stepped approach.

The basics are:

- Here are the holes in your bucket;

- Look, your competitors don't have them;

- Here is how those holes affect performance;

- Here's something we did earlier and its resulting uplift; &

- Show me the money (or time).

Identifying the Technical Deficit - Finding the holes

When I do a Technical Audit, I tend to spend some time on a website first and look for problems with my eyes initially. Robots, sitemaps, canonicals, hreflang, URLs and of course a dig in GA & GSC. Whilst I'm doing that, I have Sitebulb whirring away in the background to support the audit.

Knowing the issues is half of the battle. Of course, all tools can help identify problems, but having a clear understanding of the impact of those problems is vital. There are broken internal links? Great, report them. But why should time and effort be spent on fixing them? What are the commercial gains from making a change, or what are the risks of not fixing it? And further to this, what is the opportunity cost of fixing these over other items in the backlog?

When you have your Technical Audit report, you absolutely must add the answer to the inevitable "So what" when the client reads it. What is the loss created by this issue, and what is the potential gain from resolving it? It doesn't need to be a forecast or full-on business case, just something clear to show you can see past the "a tool told me to tell you", and you understand that the client will have to argue for the budget to make changes.

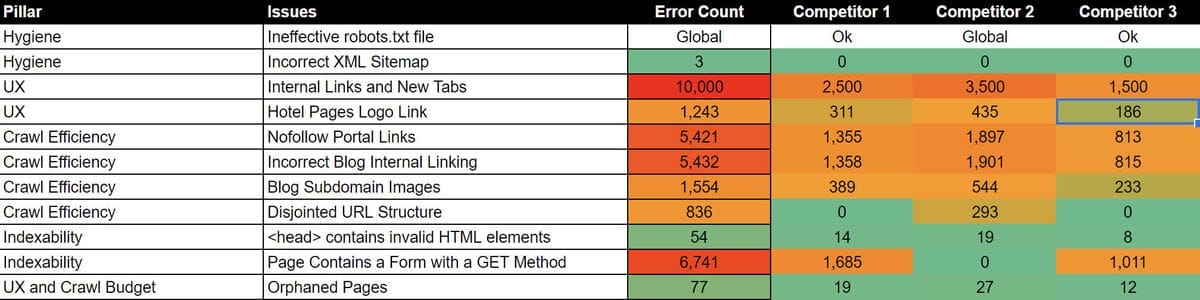

Compare to Your Competitors

Next, having identified a problem and shown the risk or value attached to it, you need to prove to the client that a competitor is doing better than them. I tend to run a lighter audit on two or three key competitors and present a stripped-down list of the issues to show their 'standing' against the client's website on an issue-by-issue level.

This helps to impress upon people the potential scale of change needed to catch up with or exceed a competitor. When you link this to rankings or overall visibility and commercial measures, it helps to paint a picture of the potential opportunities the client has to do better.

Measure, Measure and Measure a Little More

Knowing the issues is a big part of the puzzle, but showing how a client performs across multiple measures and comparing that to competitors is critical. But what to measure? How and why is one measure more critical than others? Well, those are tough questions! The easy answer is to measure what matters to both clients and SEO. What matters to clients is virtually always linked to the bottom line: commercial measures that can be reported on as numbers linked directly or indirectly to that bottom line. What matters to SEO? I'll leave that argument to rumble on on Twitter… My feeling is that the answer, to use a typical SEO phrase, is "it depends": on the client, the vertical and what you know will be relevant to your client.

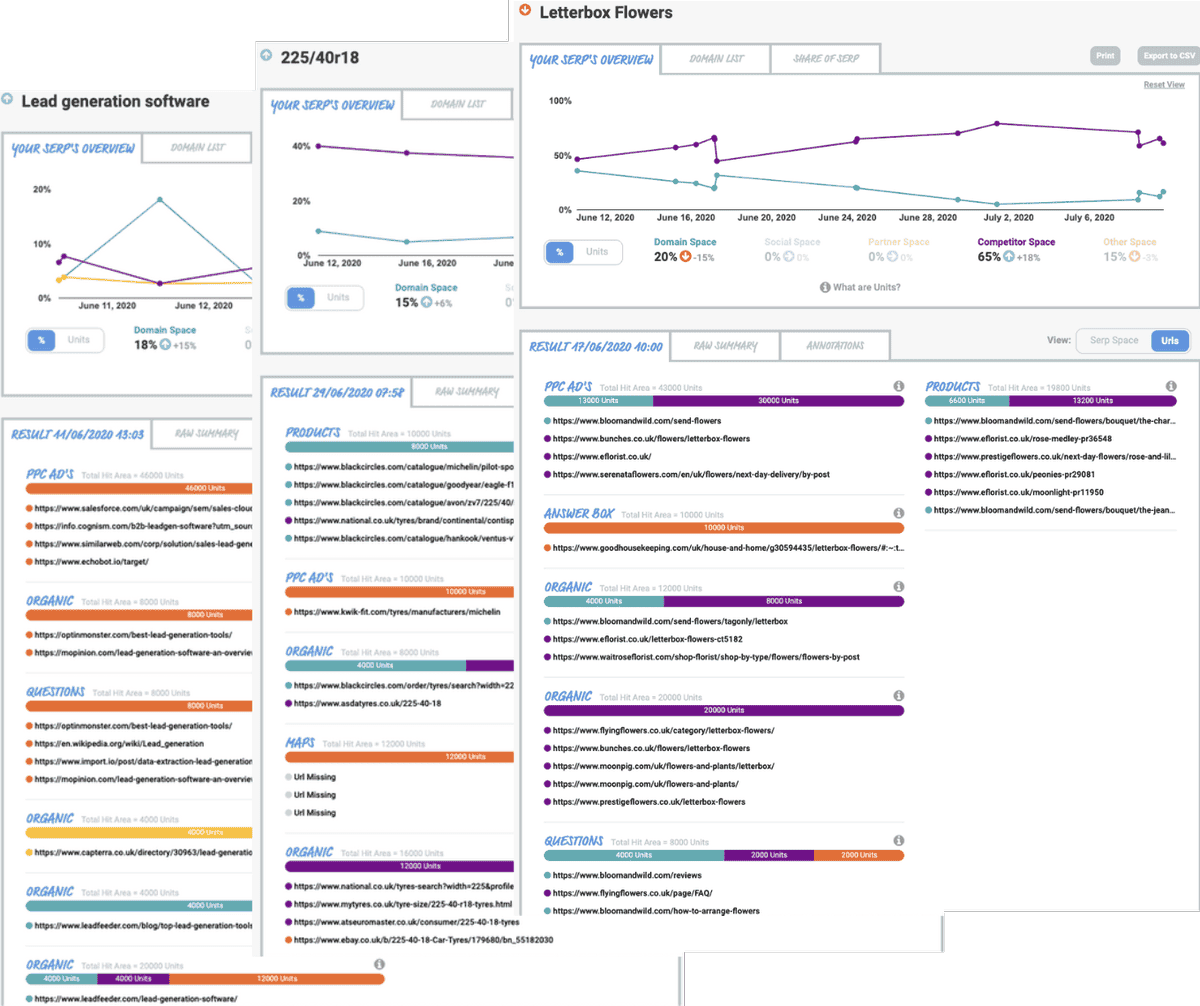

For me, that's why I co-founded SERPsketch as it helps stakeholders at almost every level of an organisation understand beyond fallible rank trackers. What does P1 mean? How do SERP features impact things? How volatile are the SERPs and SERP features? Linking these together with commercial measures supports client communication from Sale to Strategy and from Strategy to Deployment and Results.

Conventional rank tracking is fine to a degree, but to make the data meaningful, you need to know what the actual SERP looks like. An increase in ranking position may boost your visibility in standard tools, but in reality it might be overshadowed by a large PAA (People Also Ask) box or map pack. Viewing your data in this way will help you to model the impact of the SEO work done in a way that relates to actual traffic, engagement and then your bottom line.

Group Issues and Create Cases

Once you have your issues from the audit and know how they stack up against competitors, as well as a real understanding of the SERPs, connected to the bottom line, it's time to group them and build out business cases.

By grouping issues by the pillar of Technical SEO, you're more likely to be able to build a measurable case to get signed off, and you'll make a friend of a developer since they may be building out the remedy to linked tasks in one sprint. They like sprints that aren't all disparate.

For this post, and because it's a shiny new Sitebulb feature, I'll use Schema and Search Features in my example.

So let's say that in the audit you find, out of 10,000 pages there are 7,000 with Schema mark-up implemented, but 6,500 pages have the mark-up with validation errors. Ok so there are always opinions about how much value Schema mark-up can bring, but my feeling is that it can bring a lot. However, we all know that budget and resources aren’t signed off on feeling alone.
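To put those audit numbers in perspective, here is a quick sketch of the arithmetic. It uses only the hypothetical figures from the example above; the variable names are my own.

```python
# Hypothetical audit figures taken from the example above.
total_pages = 10_000
pages_with_schema = 7_000
pages_with_errors = 6_500

coverage = pages_with_schema / total_pages           # share of pages with any mark-up
error_rate = pages_with_errors / pages_with_schema   # share of marked-up pages failing validation
valid_pages = pages_with_schema - pages_with_errors  # pages with clean mark-up
valid_share = valid_pages / total_pages              # share of the whole site with clean mark-up

print(f"Schema coverage: {coverage:.0%}")
print(f"Marked-up pages with validation errors: {error_rate:.1%}")
print(f"Pages with valid mark-up: {valid_pages} ({valid_share:.0%} of the site)")
```

Put that way, only a small slice of the site has mark-up that validates, which is a much easier headline for a client to grasp than a raw error count.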

If you’re an SEO then you know that schema is great because it gives you added opportunity for content relevance to be understood, helps the robots to understand the entities on a page and how they link to things the robots know to be accurate, and also helps to earn potential SERP features (if you want that). Awesome. Unfortunately, not everyone gets that, so let me show you how I’d get resource put towards implementing Schema validation fixes.

If we group Schema validation issues into one 'Pillar' we can then create a business case that helps to 'sell' the changes into the client and get that resource released.

We'd look at things like:

- Ownership % of the SERP space against competitors for keywords that are linked directly to the bottom line;

- CTR of the pages that rank for groups of terms you measure; &

- The conversion rate and user metrics that indicate how a page is received.

If you are measuring this in advance, great! If not, start BEFORE trying to sell in a deployment.

When you know the benchmarks of what a CTR and Conversion Rate look like based on the number of users, you can work that backwards to the % of the results you own. You can then calculate, with a level of reality that anyone can understand, what an increase in X or Y will drive. If you look over a decent period, the calculations are more realistic. You're then able to show the client that when a ranking position increases or decreases, the % of ownership impacts the CTR, which means a better or worse bottom line.
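As a rough illustration of that calculation, here is a minimal sketch. It assumes a simple model (searches × CTR × conversion rate × average order value), and every figure in it is a made-up placeholder, not client data. Your own model may well need more inputs.

```python
def monthly_revenue(searches: int, ctr: float, conversion_rate: float,
                    avg_order_value: float) -> float:
    """Estimate monthly organic revenue for one keyword group (simplified model)."""
    clicks = searches * ctr
    orders = clicks * conversion_rate
    return orders * avg_order_value


# All figures are hypothetical placeholders; swap in your own benchmarks.
searches = 50_000  # monthly searches across the keyword group
baseline = monthly_revenue(searches, ctr=0.04, conversion_rate=0.02, avg_order_value=60.0)
improved = monthly_revenue(searches, ctr=0.05, conversion_rate=0.02, avg_order_value=60.0)
uplift = improved - baseline

print(f"Baseline: {baseline:,.0f} per month")
print(f"With a one-point CTR increase: {improved:,.0f} per month")
print(f"Estimated uplift: {uplift:,.0f} per month")
```

Keeping the model this simple is deliberate: a stakeholder can follow each step, and you can loop back to the same numbers once the PoC is live.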

Prove it Can Work

It’s often not as easy as saying "We found this problem and our maths shows fixing it will make you more money". You need to be able to produce, where possible, a small scale Proof of Concept (PoC) that should help to evidence your maths.

With the Schema validation errors (say, 6,500 of them) as the subject of the PoC, you should then look at one of your groups of valuable keywords and the pages you're tracking against competitors. When you have a PoC group picked, work with whoever is needed to deploy the fix on a small scale.

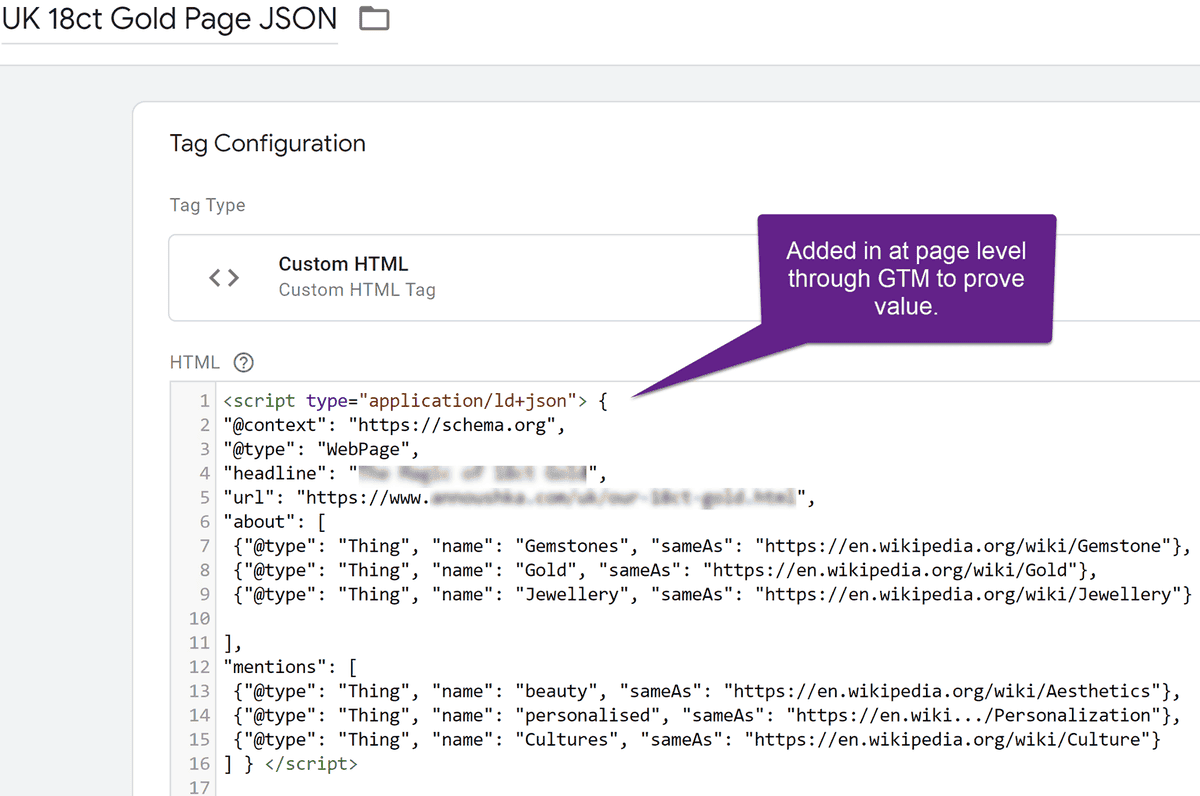

Wherever I can, I try to deploy fixes in GTM if it means getting something done to prove it works without needing to get resource signed off.

For example, I used GTM to deploy an entity-based schema PoC to a category across a client's site. This was to show the impact before being deployed sitewide.

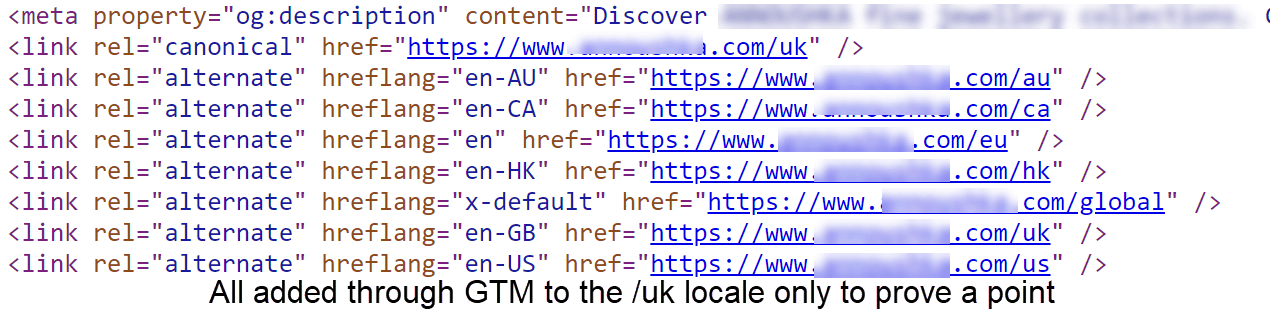
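To give a flavour of what an entity-based schema PoC might contain, here is a hedged sketch that builds a JSON-LD block for a category page. This is not the mark-up used for that client: the function name, the choice of `CollectionPage` with an `about` entity, and all URLs are illustrative assumptions. The idea is that the resulting `<script>` block could be pasted into a GTM Custom HTML tag scoped to the PoC category.

```python
import json


def build_jsonld_tag(page_name: str, page_url: str,
                     entity_name: str, entity_same_as: str) -> str:
    """Return a <script> block holding JSON-LD for a category page (hypothetical helper)."""
    data = {
        "@context": "https://schema.org",
        "@type": "CollectionPage",
        "name": page_name,
        "url": page_url,
        # Tie the page to a known entity so it is less ambiguous what the page is about.
        "about": {
            "@type": "Thing",
            "name": entity_name,
            "sameAs": entity_same_as,
        },
    }
    body = json.dumps(data, indent=2)
    return f'<script type="application/ld+json">\n{body}\n</script>'


# Placeholder values for illustration only.
tag = build_jsonld_tag(
    page_name="Trainers",
    page_url="https://www.example.com/trainers/",
    entity_name="Sneakers",
    entity_same_as="https://en.wikipedia.org/wiki/Sneakers",
)
print(tag)
```

Whatever you generate, run it through a validator before it goes anywhere near GTM, or the PoC just adds to the error count.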

For a different client, I used Google Sheets and GTM to deploy an hreflang PoC on one locale to show the impact before implementing it across the rest of the site.
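As a sketch of how a sheet can drive this kind of PoC, the snippet below turns rows exported from a spreadsheet as CSV into hreflang link tags for a single page. The column names (`path`, `hreflang`, `href`), the sample rows and the helper function are all assumptions for illustration; it is not the exact setup used for that client.

```python
import csv
import io


def hreflang_tags(sheet_csv: str, page_path: str) -> list[str]:
    """Build <link rel="alternate"> tags for one page from CSV rows.

    Assumed columns: path, hreflang, href.
    """
    rows = csv.DictReader(io.StringIO(sheet_csv))
    return [
        f'<link rel="alternate" hreflang="{row["hreflang"]}" href="{row["href"]}" />'
        for row in rows
        if row["path"] == page_path
    ]


# Hypothetical CSV export of the mapping sheet.
SHEET = """path,hreflang,href
/shoes/,en-gb,https://www.example.com/uk/shoes/
/shoes/,fr-fr,https://www.example.com/fr/chaussures/
/shoes/,x-default,https://www.example.com/shoes/
/bags/,en-gb,https://www.example.com/uk/bags/
"""

for tag in hreflang_tags(SHEET, "/shoes/"):
    print(tag)
```

Keeping the mapping in a sheet means the client or a content team can maintain it without touching the tag logic.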

Focus on The Numbers

As soon as you deploy a PoC, tell your client and begin measuring the impact against the numbers you used in the business case - and annotate Google Analytics to help with future sign-off on implementation. You've got a much better chance of getting some resource pinched from another department if you loop back to numbers everyone agreed on and show an uplift.

Revisiting the business case, showing the potential value of changes at scale based on your calculations then means that stakeholders are more comfortable asserting the need to reallocate resource. You've then got a team of advocates who'll help see things through.

Deploy the Changes at Scale and Measure

So you got the resource! YAY! Now the deployment at scale needs to be driven forwards. If you're working with a decent developer, they'll have a great scoping and deployment process. Stick with what they know works; it'll create good relationships and make sure you all share the resulting win.

As soon as things go live, pause other deployments that could impact the resulting measures. Regularly check the measures in the PoC and report back on changes. This shows transparency, but also helps to keep traction and good communication while you're working on the next PoC.

Pop the Champers

You win the internet. The client is happy and the bottom line is better. You get a warm fuzzy feeling and move on to the next problem to solve.

Enjoy the win.

Chris is the founder of The OMG Center, a digital agency accelerator business. With 10 years of leadership experience, Chris knows how to get things done. He's worked with clients across all levels and has been exposed to multiple layers in order for him to help you reach your goals as quickly or slowly as is right for YOU!
